HAppA: A Modular Platform for HPC Application Resilience Analysis with LLMs Embedded

Overview of the framework

Abstract

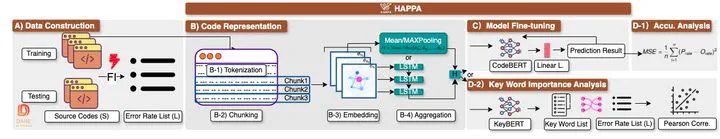

High-performance computing (HPC) systems are increasingly vulnerable to soft errors, which make it difficult to maintain computational accuracy and reliability. Predicting the resilience of HPC applications to these errors is crucial for robust code protection and detailed resilience analysis. In this study, we present HAppA, a modular platform for HPC Application Resilience Analysis. By embedding Large Language Models (LLMs), HAppA addresses the challenge of capturing context across the long code sequences typical of HPC applications. HAppA implements a novel code representation module that chunks the code into fixed-size segments and aggregates the embeddings of these segments. We explore three aggregation methods: MeanPooling, MaxPooling, and an LSTM-based technique. We built a DAtaset for REsilience analysis using Fault Injection (FI), named DARE, and trained HAppA on it for regression prediction tasks. Our evaluation compares HAppA's predictive accuracy with that of other models: the LSTM-based variant, HAppA-LSTM, achieves a mean squared error (MSE) of 0.078 for silent data corruption (SDC) prediction, lower than the 0.1172 recorded by the existing state-of-the-art PARIS model. Additionally, HAppA uses the KeyBERT model to extract a list of keywords representing the source code. A comprehensive importance analysis of these keywords further elucidates the code patterns that contribute to the error rate. These findings highlight the effectiveness of HAppA in analyzing the resilience of HPC applications and establish a new benchmark for predictive accuracy in resilience prediction.
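The chunk-then-aggregate idea in the abstract can be illustrated with a minimal sketch. This is not HAppA's implementation: `toy_embed` is a hypothetical stand-in for an LLM encoder, the function names and the segment size are assumptions made for illustration, and the LSTM-based aggregation is omitted because it requires a trained model. Only mean and max pooling over per-segment embeddings are shown.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def chunk(tokens: Sequence[str], size: int) -> List[List[str]]:
    """Split a token sequence into consecutive fixed-size segments.

    The last segment may be shorter than `size`.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    return [list(tokens[i:i + size]) for i in range(0, len(tokens), size)]


def toy_embed(segment: Sequence[str], dim: int = 4) -> Vector:
    """Hypothetical stand-in for an LLM encoder (assumption, not HAppA's model).

    Produces a deterministic vector from character codes so the sketch
    runs without any model weights.
    """
    vec = [0.0] * dim
    for tok in segment:
        for j, ch in enumerate(tok):
            vec[j % dim] += ord(ch) / 1000.0
    return vec


def mean_pool(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean across segment embeddings."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def max_pool(vectors: Sequence[Vector]) -> Vector:
    """Element-wise maximum across segment embeddings."""
    return [max(col) for col in zip(*vectors)]


def represent(
    tokens: Sequence[str],
    size: int,
    embed: Callable[[Sequence[str]], Vector],
    pool: Callable[[Sequence[Vector]], Vector],
) -> Vector:
    """Chunk the tokens, embed each segment, and pool into one vector."""
    segments = chunk(tokens, size)
    return pool([embed(seg) for seg in segments])


if __name__ == "__main__":
    code = "for ( i = 0 ; i < n ; i ++ ) sum += a [ i ] ;"
    tokens = code.split()
    print(represent(tokens, 8, toy_embed, mean_pool))
    print(represent(tokens, 8, toy_embed, max_pool))
```

Whatever the length of the input, the pooled output has the same dimensionality as a single segment embedding, which is what allows a fixed-size regression head to consume arbitrarily long source files.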

Publication

In 2024 43rd International Symposium on Reliable Distributed Systems (SRDS)
